Inside Meta's Data Flow Discovery

Discover How Meta Tracks Data Journeys to Safeguard User Privacy at Scale

TL;DR

Situation

Meta handles vast amounts of user data across its platforms, requiring strong privacy controls to protect sensitive information. A critical component of this effort is data lineage, which helps trace how data moves across different systems, ensuring compliance with privacy policies like purpose limitation.

Task

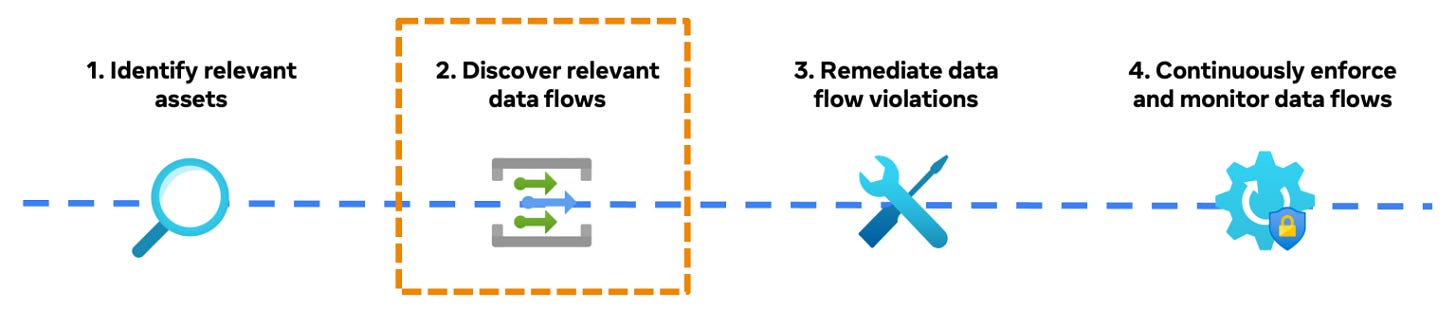

Meta needed a scalable and automated way to track data lineage across millions of assets, including databases, web services, and AI systems. This required moving beyond manual data flow documentation to a more robust, automated discovery process.

Action

Data Flow Collection – Used static code analysis, runtime instrumentation, and input/output matching to track data across stacks (Hack, C++, Python, SQL).

Privacy Probes – Captured real-time runtime signals, identifying how and where sensitive data is logged, stored, or transformed.

Automated Lineage Graphs – Created scalable data flow visualizations to streamline privacy control implementation.

AI & Data Warehouse Integration – Ensured end-to-end traceability across AI models, databases, and batch-processing systems.

Iterative Filtering Tool – Allowed developers to refine lineage graphs, isolating relevant data flows and removing noise.

Result

Meta’s data lineage system reduced engineering time, improved compliance accuracy, and automated privacy enforcement. It enabled developers to quickly identify and secure sensitive data flows while ensuring continuous monitoring at scale. These innovations enhanced user data protection across Meta’s ecosystem

Use Cases

Privacy Enforcement, Compliance Monitoring, Data Lineage

Tech Stack/Framework

Python, SQL, C++, PyTorch, Presto, Spark

Explained Further

Meta's Privacy Aware Infrastructure (PAI) is designed to embed privacy controls within its systems, ensuring user data is handled responsibly. A foundational element of PAI is data lineage, which traces the journey of data across various platforms, providing a comprehensive view of its flow from collection to processing and storage. This capability is crucial for implementing privacy measures like purpose limitation, which restricts data usage to specific, intended purposes

Understanding Data Lineage at Meta

Data lineage involves mapping out how data moves through Meta's vast ecosystem, connecting source assets (e.g., database tables where data originates) to sink assets (e.g., tables or systems where data is stored or processed).

This mapping is essential for:

Keep reading with a 7-day free trial

Subscribe to Data Tinkerer to keep reading this post and get 7 days of free access to the full post archives.