To Build or Not to Build AI Agents

Discover Anthropic’s framework for knowing when AI agents add value or when simpler solutions suffice

TL;DR

Definitions:

Workflows: Predefined code paths for LLM-tool orchestration.

Agents: Dynamic systems where LLMs independently decide processes and tool usage.

When to Use Agents:

Use simple LLM calls unless task complexity justifies agentic systems.

Workflows suit predictable tasks; agents work best for flexible, open-ended problems.

Agentic System Patterns:

Augmented LLMs: LLMs with retrieval, tools, and memory.

Prompt Chaining: Tasks split into sequential steps, trading higher latency for higher accuracy.

Routing: Classifies inputs and directs each to a specialized follow-up task, improving task-specific performance.

Parallelization: Runs independent subtasks simultaneously for speed, or runs the same task multiple times for diverse outputs (e.g., sectioning and voting).

Orchestrator-Workers: Dynamic task delegation by a central orchestrator.

Evaluator-Optimizer: Iterative loops for refinement based on evaluation feedback.

Autonomous Agents:

Ideal for unpredictable, multi-step tasks that require flexibility and where the model's decision-making can be trusted.

Demand rigorous testing, sandboxing, and human oversight to mitigate costs and errors.

Best Practices:

Keep designs simple and transparent.

Focus on crafting and testing effective tool interfaces.

Combine and customize patterns based on specific use cases.

Explained Further

Simple Wins

The most effective LLM agent implementations favor simplicity. Rather than relying on intricate frameworks or highly specialized libraries, they use modular, composable patterns that prioritize clarity and maintainability. Starting with direct LLM API calls lets developers iterate quickly and understand core behavior before layering in complexity.

Definitions

Anthropic distinguishes between two types of agentic systems: workflows and agents.

Workflows: Predefined processes where LLMs operate within rigid code paths. Ideal for predictable tasks, workflows deliver consistency and reliability.

Agents: Dynamic systems where LLMs decide how to complete tasks and which tools to use. These systems prioritize flexibility and autonomy, making them better suited for open-ended, complex problems.

When to Use Agents

Agentic systems typically trade latency and cost for better task performance, so choosing when to implement them means weighing that tradeoff against the benefits of simplicity.

For many tasks, a single LLM call augmented with retrieval or examples suffices.

Workflows shine when tasks are well-defined and predictable, offering efficiency and consistency.

Agents excel when flexibility and model-driven decision-making are needed at scale, such as handling unstructured inputs whose required steps cannot be predicted in advance.

Agentic System Patterns

Agentic systems often combine foundational building blocks and workflows. Below are key patterns Anthropic recommends:

Building Block: Augmented LLMs

The foundation of any agentic system, augmented LLMs incorporate tools, retrieval capabilities, and memory.

Best Practice: Tailor augmentations to specific use cases and ensure interfaces are well-documented.

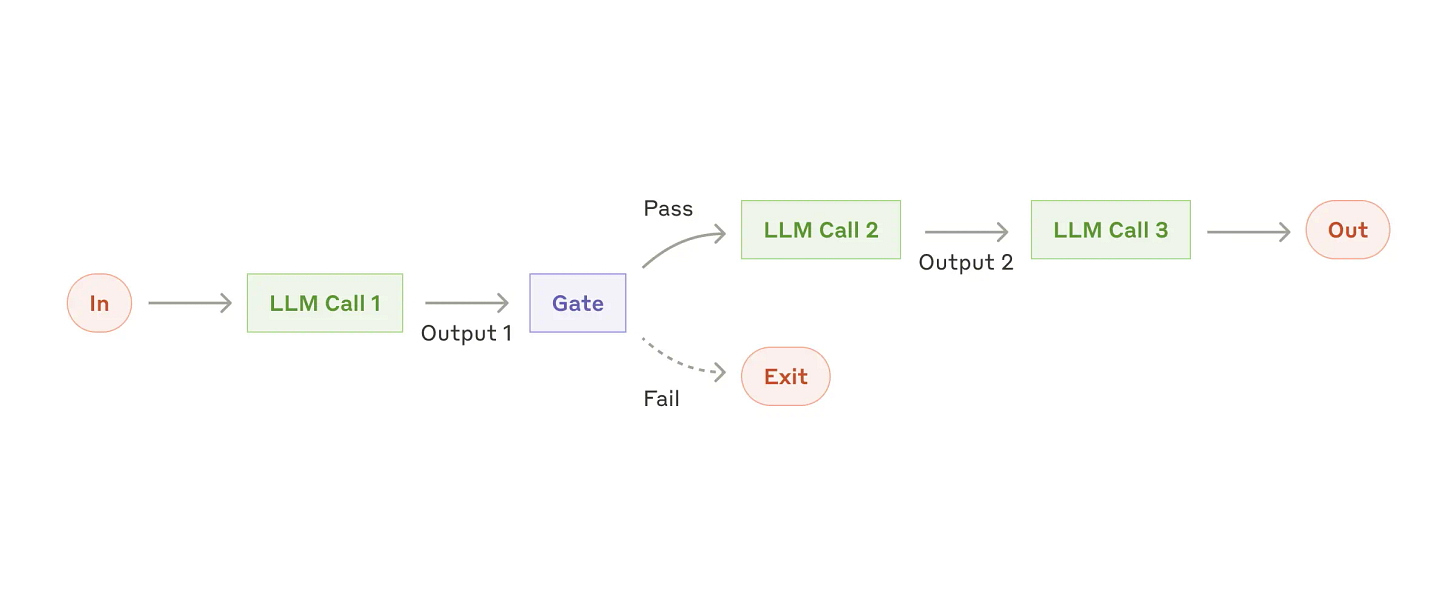
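To make the building block concrete, here is a minimal Python sketch of an augmented LLM with retrieval and memory. It assumes a hypothetical `call_llm` function standing in for a real model API, and the keyword-overlap retriever is an illustrative placeholder for a proper search index; tools are omitted for brevity.

```python
from dataclasses import dataclass, field


def call_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model API call; echoes the question line."""
    return f"Answer to: {prompt.splitlines()[-1]}"


@dataclass
class AugmentedLLM:
    documents: list[str]                             # retrieval corpus
    memory: list[str] = field(default_factory=list)  # past exchanges, oldest first

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        # Rank documents by how many query words they share (placeholder for real search).
        words = set(query.lower().split())
        ranked = sorted(
            self.documents,
            key=lambda doc: len(words & set(doc.lower().split())),
            reverse=True,
        )
        return ranked[:k]

    def ask(self, query: str) -> str:
        # Augment the prompt with retrieved context and conversation memory.
        context = "\n".join(self.retrieve(query))
        history = "\n".join(self.memory)
        prompt = f"Context:\n{context}\nHistory:\n{history}\nQuestion: {query}"
        answer = call_llm(prompt)
        self.memory.append(f"Q: {query} | A: {answer}")  # remember this exchange
        return answer
```

Because each exchange is appended to `memory`, a follow-up question is sent to the model together with the earlier exchange.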

Workflow: Prompt Chaining

Brief Explanation: This pattern breaks down a task into sequential, manageable steps, where each LLM output serves as input for the next. This structure enhances accuracy at the expense of latency.

Use Case: Tasks that decompose cleanly into fixed subtasks, where intermediate outputs can be checked for quality before the next step runs.

Example: Writing marketing copy, verifying it against criteria, and translating it into another language. Each step is optimized for accuracy before passing to the next.

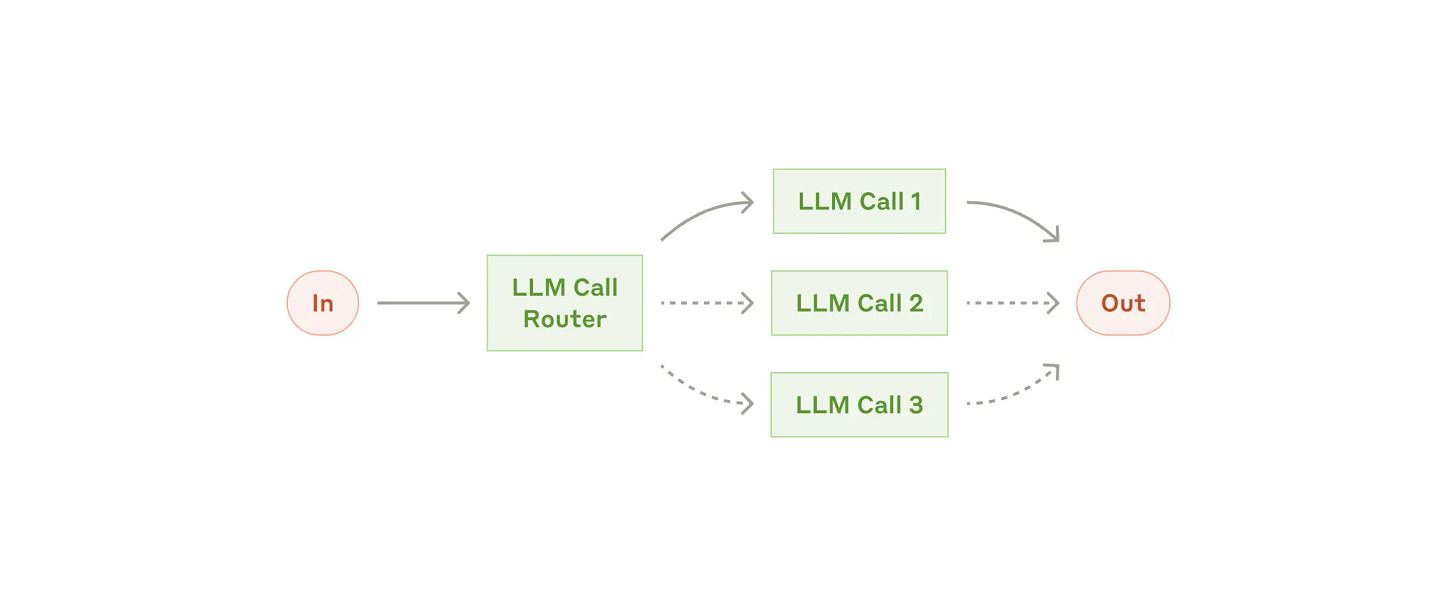
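The chain above can be sketched in a few lines of Python. This is a minimal illustration, not Anthropic's code: `call_llm` is a hypothetical stub returning canned text, and the word-count gate is an assumed example of a programmatic check between steps.

```python
def call_llm(prompt: str) -> str:
    """Hypothetical model call; returns canned text so the sketch runs offline."""
    if prompt.startswith("Write"):
        return "Fast, light running shoes for every trail."
    if prompt.startswith("Translate"):
        # Pretend translation: tag the text that follows the first colon.
        return "[FR] " + prompt.split(":", 1)[1].strip()
    return ""


def passes_length_gate(text: str, max_words: int = 12) -> bool:
    # Programmatic check between steps: reject copy that is too long.
    return len(text.split()) <= max_words


def run_chain(product: str) -> str:
    # Step 1: generate the marketing copy.
    draft = call_llm(f"Write one line of marketing copy for {product}.")
    # Gate: stop early if the intermediate output fails validation.
    if not passes_length_gate(draft):
        raise ValueError(f"Copy failed the length check: {draft!r}")
    # Step 2: the validated output becomes the input of the next call.
    return call_llm(f"Translate into French: {draft}")
```

Failing fast at the gate avoids spending a second model call on copy that would be rejected anyway.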

Workflow: Routing

Brief Explanation: Inputs are classified and directed to specialized workflows or processes based on their category, enabling separation of concerns and customized handling.

Use Case: Scenarios where inputs can be categorized into distinct types requiring different handling.

Example: Customer service workflows where general questions, refund requests, and technical support tickets are routed to specific processes.

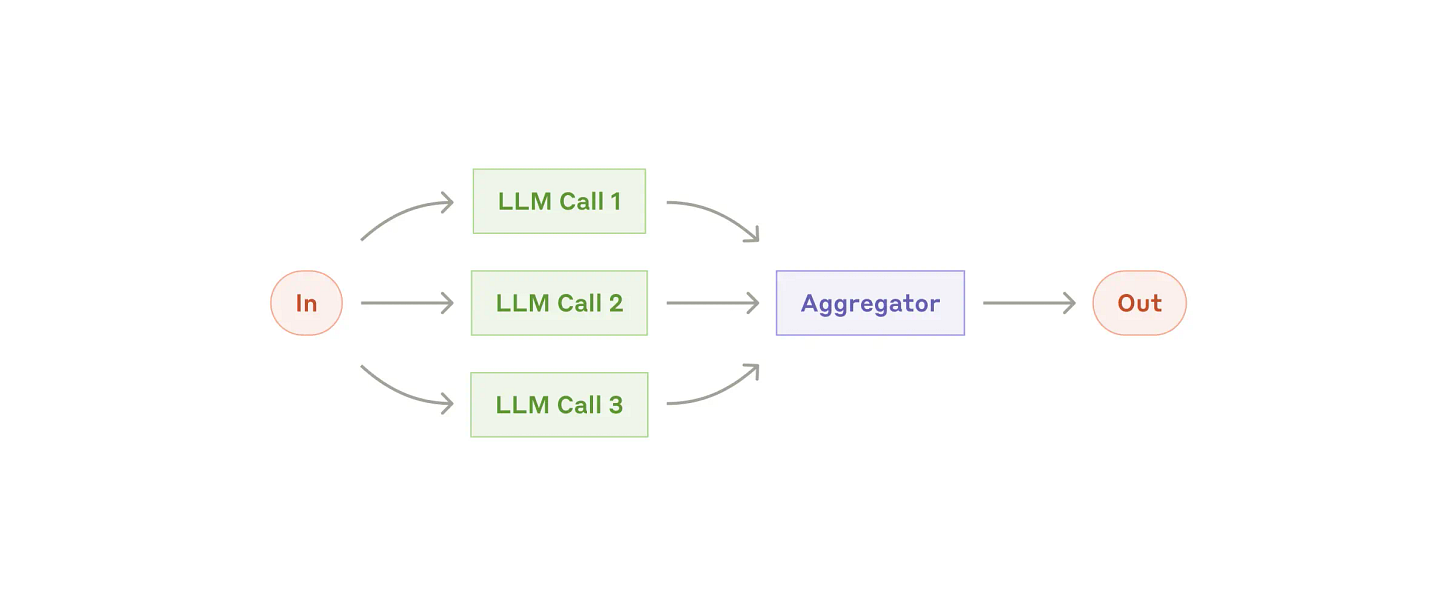
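A minimal routing sketch in Python, assuming a hypothetical `call_llm` classifier (keyword rules stand in for a real model) and illustrative handler functions for each ticket category.

```python
def call_llm(prompt: str) -> str:
    """Hypothetical classifier call; keyword rules on the last prompt line stand in for a model."""
    ticket = prompt.splitlines()[-1].lower()
    if "refund" in ticket:
        return "refund"
    if "error" in ticket or "crash" in ticket:
        return "technical"
    return "general"


def handle_general(ticket: str) -> str:
    return f"FAQ answer for: {ticket}"


def handle_refund(ticket: str) -> str:
    return f"Refund workflow started for: {ticket}"


def handle_technical(ticket: str) -> str:
    return f"Escalated to technical support: {ticket}"


ROUTES = {
    "general": handle_general,
    "refund": handle_refund,
    "technical": handle_technical,
}


def route(ticket: str) -> str:
    # Step 1: classify the input with one model call.
    label = call_llm(f"Classify this ticket as general, refund, or technical.\n{ticket}").strip()
    # Step 2: dispatch to the specialized handler, falling back to the general path.
    handler = ROUTES.get(label, handle_general)
    return handler(ticket)
```

Falling back to the general handler when the classifier returns an unexpected label keeps a misclassification from crashing the workflow.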

Workflow: Parallelization

Brief Explanation: Tasks are either divided into independent subtasks that run in parallel, or the same task is run multiple times to generate diverse outputs. This can speed up tasks or increase confidence in the results.

Use Case: Scenarios that benefit from task division for speed or multiple evaluations for validation.

Examples:

Sectioning: Implementing guardrails where one model screens for inappropriate content while another handles the query.

Voting: Running multiple reviews of a piece of code with different prompts to flag vulnerabilities and enhance reliability.

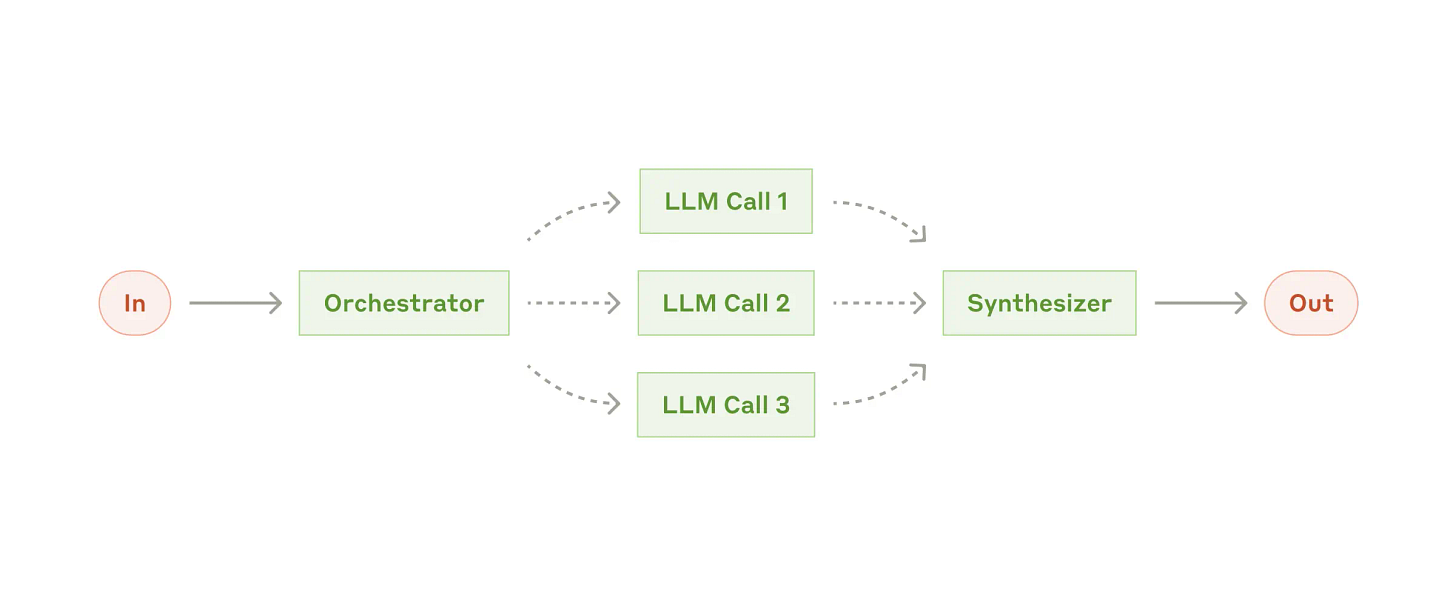
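Both parallelization variants can be sketched with Python's standard `concurrent.futures` thread pool, which suits I/O-bound model calls. Here `call_llm` is a hypothetical stub with canned replies, and the review prompts and the `threshold` parameter are illustrative assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def call_llm(prompt: str) -> str:
    """Hypothetical model call with canned replies so the sketch runs offline."""
    body = prompt.split("\n", 1)[1]
    if prompt.startswith("Screen"):
        return "UNSAFE" if "hack" in body.lower() else "SAFE"
    if prompt.startswith("Review for SQL injection"):
        return "FLAG" if "+ user_input" in body else "PASS"
    if prompt.startswith("Review for hard-coded secrets"):
        return "FLAG" if "API_KEY =" in body else "PASS"
    if prompt.startswith("Review"):
        return "PASS"
    return f"Answer: {body}"


def answer_with_guardrail(query: str) -> str:
    # Sectioning: the guardrail check and the answer run concurrently.
    with ThreadPoolExecutor(max_workers=2) as pool:
        screen = pool.submit(call_llm, f"Screen for inappropriate content:\n{query}")
        answer = pool.submit(call_llm, f"Answer the user:\n{query}")
        if screen.result() == "UNSAFE":
            return "Request declined."
        return answer.result()


REVIEW_PROMPTS = [
    "Review for SQL injection:",
    "Review for hard-coded secrets:",
    "Review for unsafe deserialization:",
]


def vote_on_code(code: str, threshold: int = 1) -> bool:
    # Voting: the same code is reviewed under several prompts at once.
    prompts = [f"{p}\n{code}" for p in REVIEW_PROMPTS]
    with ThreadPoolExecutor() as pool:
        verdicts = list(pool.map(call_llm, prompts))
    # Flag the code when at least `threshold` reviewers report a problem.
    return sum(v == "FLAG" for v in verdicts) >= threshold
```

Raising `threshold` makes the vote more conservative: more reviewers must agree before code is flagged, trading fewer false positives for more missed issues.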

Workflow: Orchestrator-Workers

Brief Explanation: A central orchestrator LLM dynamically determines and assigns subtasks to worker LLMs, synthesizing their outputs for the final result. This provides flexibility for unpredictable tasks.

Use Case: Complex, multi-step tasks where subtasks are unknown at the outset and need to be determined dynamically.

Example: Coding agents that dynamically identify and modify multiple files to implement requested changes, with the orchestrator assigning and coordinating worker tasks.

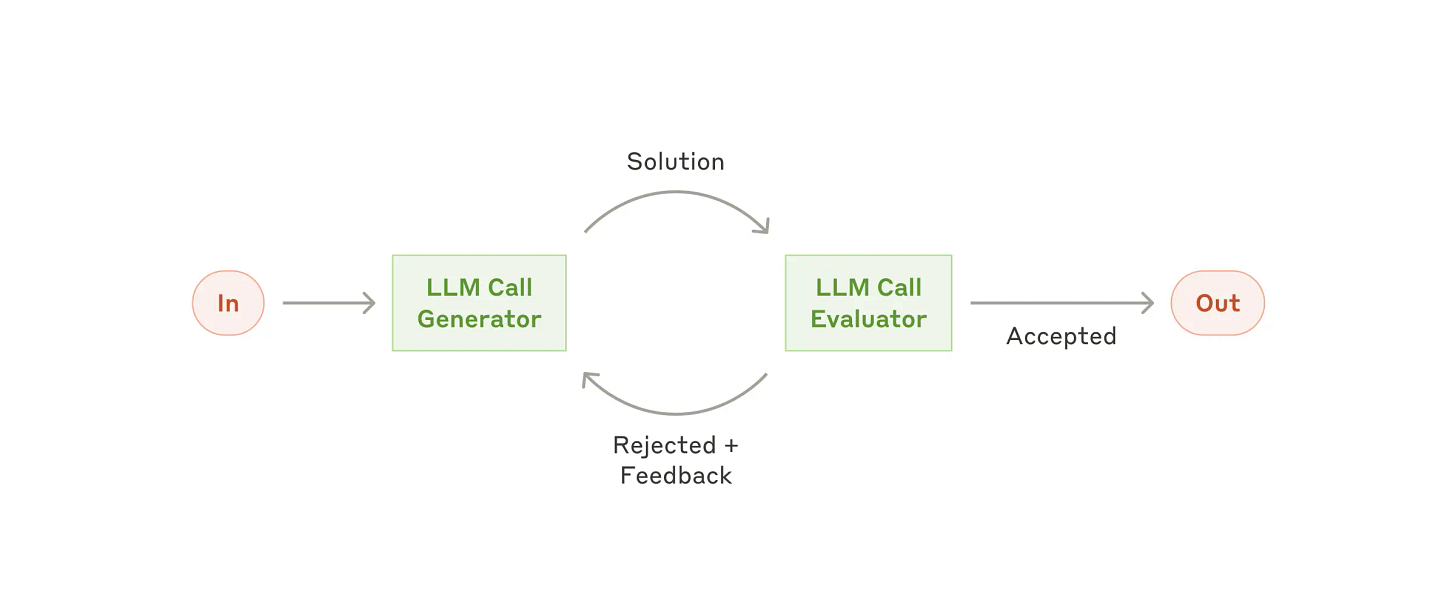
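A minimal orchestrator-workers sketch in Python. The hypothetical `call_llm` stub plays all three roles: it returns a JSON plan, performs each file edit, and combines the results. The file names and changes are invented for illustration.

```python
import json
from concurrent.futures import ThreadPoolExecutor


def call_llm(prompt: str) -> str:
    """Hypothetical model call with canned replies so the sketch runs offline."""
    if prompt.startswith("Plan"):
        # Orchestrator role: decide the subtasks at run time and return them as JSON.
        return json.dumps([
            {"file": "api.py", "change": "add a timeout parameter"},
            {"file": "client.py", "change": "pass the timeout through"},
        ])
    if prompt.startswith("Edit"):
        # Worker role: pretend to patch the named file.
        return "patched " + prompt.split()[1]
    if prompt.startswith("Combine"):
        # Synthesis role: merge the worker results into one summary.
        return " | ".join(prompt.splitlines()[1:])
    return ""


def orchestrate(request: str) -> str:
    # 1. The orchestrator breaks the request into subtasks it chooses itself.
    plan = json.loads(call_llm(f"Plan the file edits needed, as JSON: {request}"))
    # 2. Workers handle one subtask each, concurrently.
    prompts = [f"Edit {task['file']} to {task['change']}" for task in plan]
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(call_llm, prompts))
    # 3. The orchestrator synthesizes the worker outputs into a final result.
    return call_llm("Combine these worker results:\n" + "\n".join(results))
```

Unlike a fixed parallel split, the list of subtasks is not hard-coded here; it comes from the orchestrator's output, so a different request can yield a different number of workers.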

Workflow: Evaluator-Optimizer

Brief Explanation: One LLM generates an output, while another evaluates and provides feedback for iterative improvement. This mirrors human editing processes and produces refined results over multiple iterations.

Use Case: Tasks where iterative feedback significantly improves the final outcome.

Example: Literary translation, where nuances may be missed in the first draft. An evaluator LLM critiques each draft, and the generator LLM revises it until the translation meets all requirements.

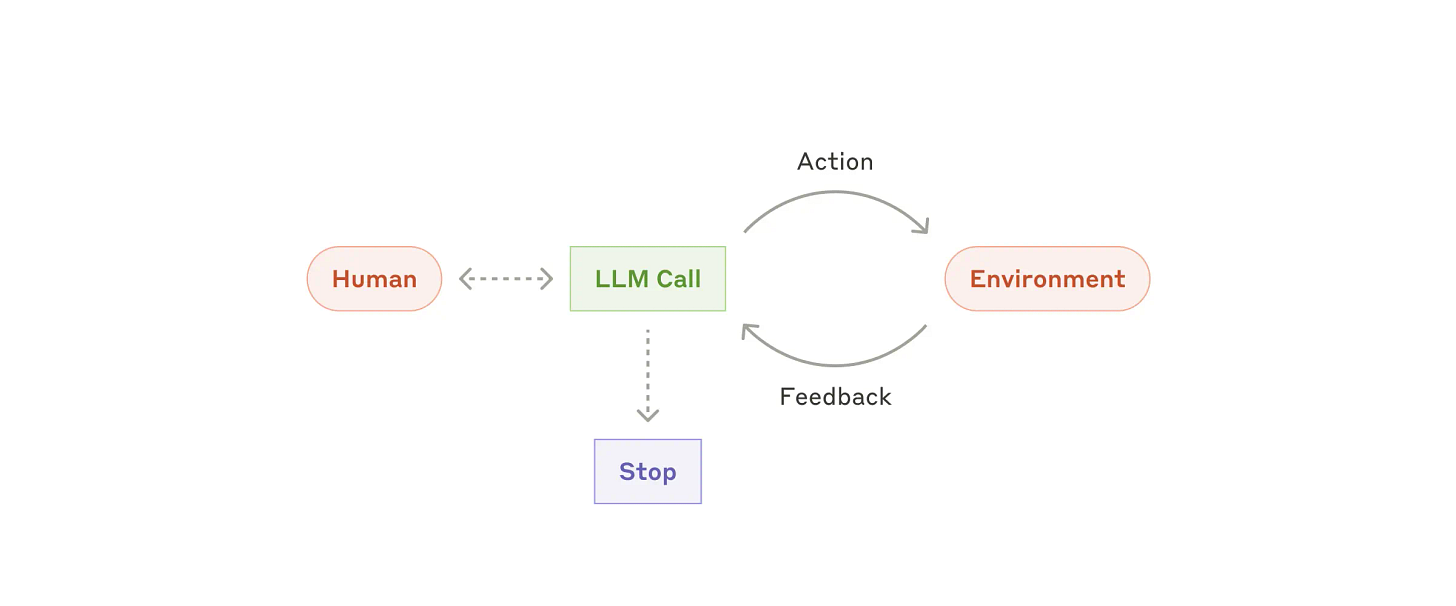
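The generate, evaluate, revise loop can be sketched as follows, assuming a hypothetical `call_llm` stub with canned replies. The `max_rounds` cap is an assumed safeguard so the loop ends even if the evaluator is never satisfied.

```python
from typing import Optional


def call_llm(prompt: str) -> str:
    """Hypothetical model call with canned replies so the sketch runs offline."""
    if prompt.startswith("Translate"):
        # Generator: the first draft is literal; a revision guided by feedback is idiomatic.
        return "It's pouring." if "idiom" in prompt else "It's raining ropes."
    if prompt.startswith("Evaluate"):
        # Evaluator: reject the literal rendering of the French idiom.
        if "ropes" in prompt:
            return "FEEDBACK: render the idiom's meaning, not its literal words"
        return "PASS"
    return ""


def refine(source: str, max_rounds: int = 3) -> tuple[str, int]:
    feedback: Optional[str] = None
    draft = ""
    for round_no in range(1, max_rounds + 1):
        prompt = f"Translate to English: {source}"
        if feedback:
            prompt += f"\nFeedback on previous draft: {feedback}"
        draft = call_llm(prompt)
        verdict = call_llm(f"Evaluate this translation of {source!r}:\n{draft}")
        if verdict == "PASS":
            return draft, round_no
        feedback = verdict.removeprefix("FEEDBACK: ")
    # Give up after max_rounds and return the latest draft.
    return draft, max_rounds
```

Usage: `refine("Il pleut des cordes.")` rejects the literal first draft and accepts the idiomatic revision in the second round.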

Autonomous Agents

Brief Explanation: Autonomous agents plan and execute tasks independently, leveraging tools, reasoning, and feedback loops. They are suited for open-ended, multi-step problems requiring adaptability and flexibility.

Use Case: Handling complex tasks where predefined workflows cannot anticipate all steps or outcomes.

Examples:

Coding Agents: Solve SWE-bench tasks by analyzing requirements, editing multiple files, and verifying results through automated tests.

Computer Use: Operate a computer directly to perform dynamic tasks such as retrieving information, analyzing it, and executing commands across external tools and systems.

These agents require rigorous testing, guardrails, and cost-efficiency strategies to ensure reliability and prevent errors from compounding.
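At its core, an autonomous agent is a loop: the model picks an action, the environment returns ground truth, and the result feeds the next decision. The Python sketch below assumes a hypothetical `call_llm` that returns JSON actions and a toy `FakeRepo` environment; the `max_steps` limit illustrates the kind of guardrail described above.

```python
import json


def call_llm(history: list[str]) -> str:
    """Hypothetical model call that picks the next action from the transcript.

    Canned rules stand in for real reasoning: run tests, fix the failure, rerun, stop.
    """
    last = history[-1]
    if last.startswith("TASK") or last.startswith("edited"):
        return json.dumps({"tool": "run_tests", "arg": ""})
    if "FAILED" in last:
        return json.dumps({"tool": "edit_file", "arg": "fix off-by-one in slice"})
    return json.dumps({"tool": "finish", "arg": "All tests pass."})


class FakeRepo:
    """Toy environment that reports ground truth after each action."""

    def __init__(self) -> None:
        self.bug_fixed = False

    def run_tests(self, _arg: str) -> str:
        return "1 passed" if self.bug_fixed else "FAILED test_slice"

    def edit_file(self, change: str) -> str:
        self.bug_fixed = True
        return f"edited: {change}"


def run_agent(task: str, repo: FakeRepo, max_steps: int = 10) -> tuple[str, list[str]]:
    history = [f"TASK: {task}"]
    tools = {"run_tests": repo.run_tests, "edit_file": repo.edit_file}
    # Step cap: a stopping condition that bounds cost if the agent never finishes.
    for _ in range(max_steps):
        action = json.loads(call_llm(history))
        if action["tool"] == "finish":
            return action["arg"], history
        tool = tools.get(action["tool"])
        # Environment feedback (or an error) is appended so the next decision can use it.
        observation = tool(action["arg"]) if tool else f"ERROR: unknown tool {action['tool']}"
        history.append(observation)
    return "Stopped: step limit reached.", history
```

In a real agent, this loop is also a natural place for human checkpoints, for example pausing before a destructive tool call.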

Best Practices

Building effective agents requires thoughtful design and testing. Anthropic emphasizes three principles for successful agent development:

Simplicity: Avoid overengineering; start small and scale complexity only when necessary.

Transparency: Make agents’ reasoning and planning steps visible to users.

Agent-Computer Interface (ACI): Invest in clear tool documentation and iterative testing so that agents use their tools correctly.
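As an ACI illustration, the Python sketch below defines a tool in the shape used by Anthropic's Messages API (`name`, `description`, `input_schema`). The `read_file` tool and its handler are hypothetical; requiring absolute paths and returning an actionable error is one assumed way to make the tool harder for a model to misuse.

```python
import os

# Hypothetical tool definition; the description tells the model exactly what input is valid.
READ_FILE_TOOL = {
    "name": "read_file",
    "description": (
        "Read a UTF-8 text file and return its contents. "
        "The path MUST be absolute, e.g. /home/user/project/main.py; "
        "relative paths are rejected."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "path": {"type": "string", "description": "Absolute path to the file."}
        },
        "required": ["path"],
    },
}


def handle_read_file(tool_input: dict) -> str:
    path = tool_input["path"]
    if not os.path.isabs(path):
        # Return an actionable error the model can correct, instead of guessing a base dir.
        return f"Error: {path!r} is not an absolute path. Retry with a full path."
    with open(path, encoding="utf-8") as f:
        return f.read()
```

Testing the tool with realistic model inputs, including malformed ones like relative paths, is what surfaces gaps in the description before the agent hits them in production.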

The Full Scoop

For a deeper dive into Anthropic’s guidelines for building effective agents, check out the original article on Anthropic’s website.